I have had some Xbee radios for some time but had not got around to doing anything with them.

I have three Xbee radios. They are all Series 2 Pro, S2B devices, with PCB antennas. Series 2 are harder to configure than Series 1, but run a full Zigbee implementation including the Zigbee API.

The Pro devices have longer range than the standard ones but use more power. The ones with the PCB antennas are the cheapest, but don’t have the range that an external antenna gives.

There are newer S2C devices that support on-board programming and the Home Automation profile. They are a bit more expensive. The S2B radios I have cost about £11 on eBay.

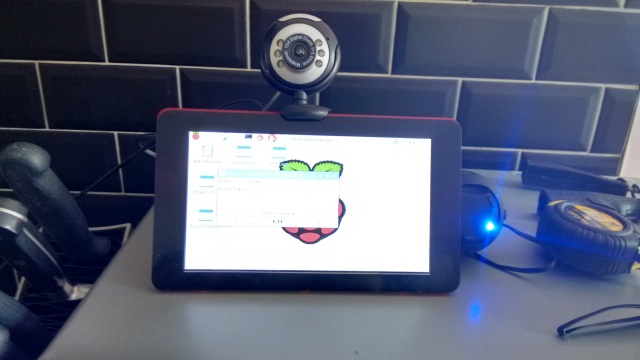

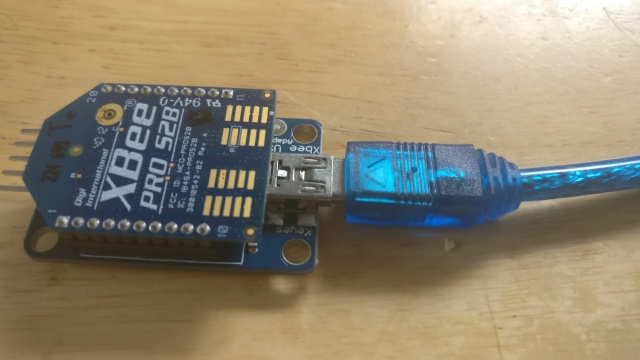

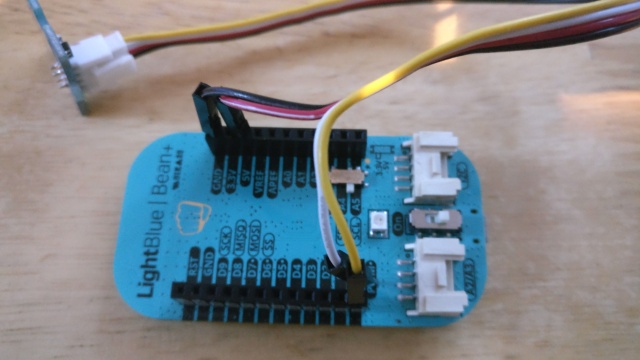

The device in the picture is mounted on a cheap USB adapter. That allows it to be programmed by the Digi XCTU software or via AT commands from a serial connection.
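As a rough sketch of what the AT command route looks like from a serial connection, the Python below enters command mode with `+++`, sends a few commands and leaves again with `ATCN`. It assumes the radio is running the AT firmware with the default one-second guard time. The names `at_session` and `read_reply` are my own invention, and `port` is any object with `write` and `read` methods, such as a pyserial `Serial` object.

```python
import time

GUARD_TIME_S = 1.0  # assumed: default guard time (GT) around "+++"


def read_reply(port, terminator=b"\r", max_bytes=64):
    """Read one byte at a time until a carriage return or until nothing arrives."""
    reply = bytearray()
    while len(reply) < max_bytes:
        chunk = port.read(1)
        if not chunk or chunk == terminator:  # timeout, end of data, or end of reply
            break
        reply += chunk
    return reply.decode("ascii", errors="replace")


def at_session(port, commands, guard_time=GUARD_TIME_S, sleep=time.sleep):
    """Enter command mode, run each AT command, then exit with ATCN."""
    sleep(guard_time)        # silence before the escape sequence
    port.write(b"+++")       # note: no carriage return after "+++"
    sleep(guard_time)        # silence after it
    if read_reply(port) != "OK":
        raise RuntimeError("radio did not enter command mode")

    results = {}
    for name in commands:
        port.write(b"AT" + name.encode("ascii") + b"\r")
        results[name] = read_reply(port)

    port.write(b"ATCN\r")    # leave command mode
    read_reply(port)
    return results
```

With pyserial installed, something like `at_session(serial.Serial("/dev/ttyUSB0", 9600, timeout=2), ["ID", "SH", "SL"])` should return the PAN ID and the two halves of the radio's serial number. The port name is a guess for a Linux machine, and 9600 baud is the factory default.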

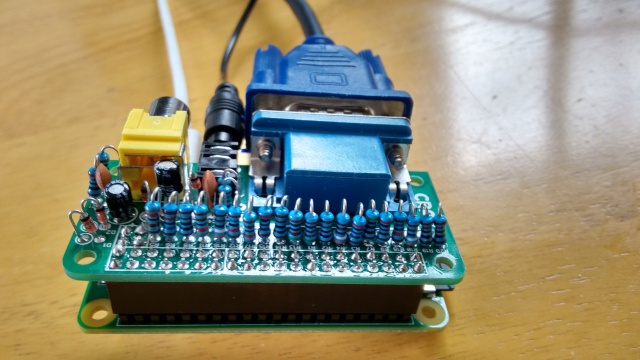

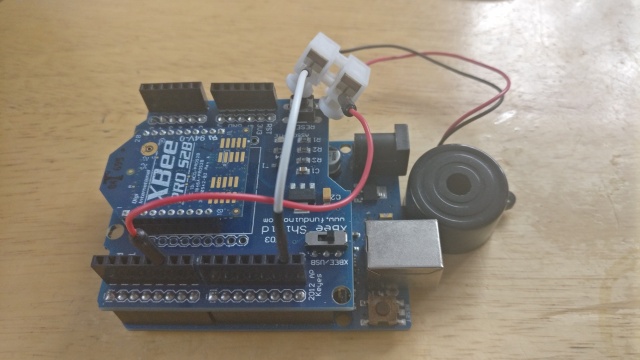

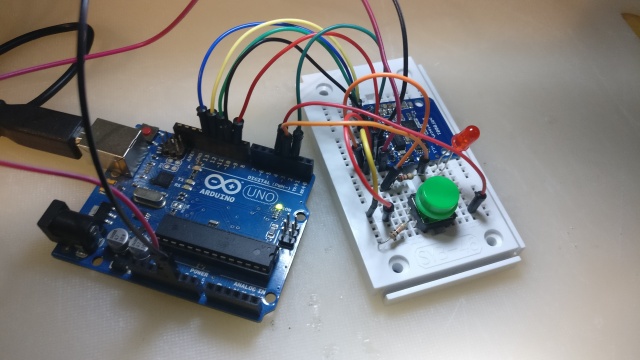

I also have a couple of Arduino shields:

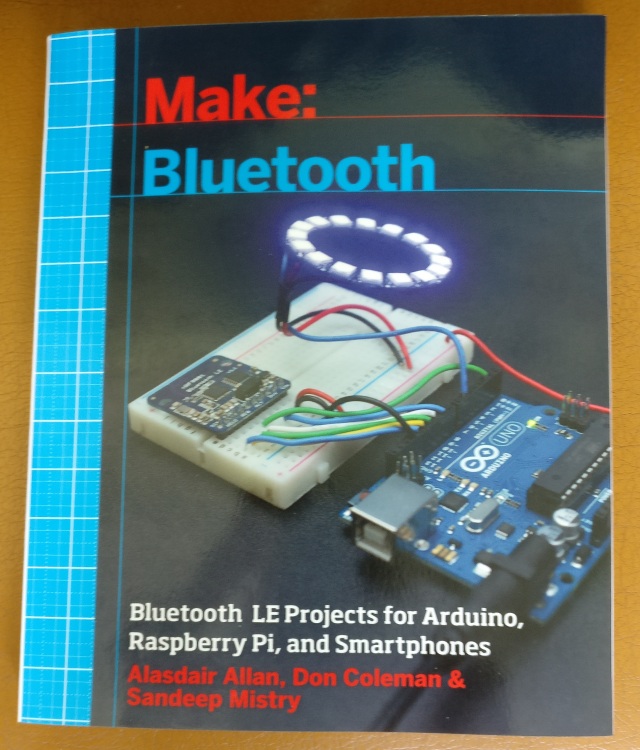

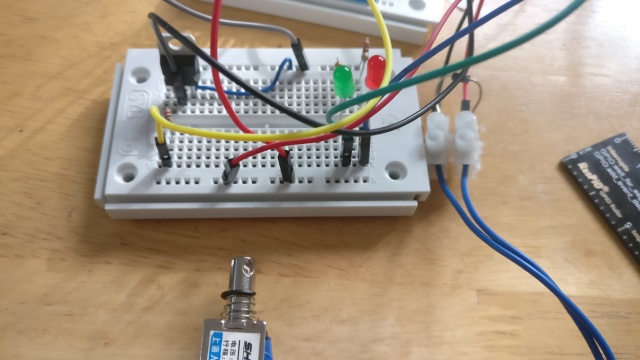

The picture shows a buzzer connected to the Arduino, as I was trying out the projects from this book:

The book is rather old but seems to be the best for practical projects with Series 2 radios, as well as having a lot of information on the AT commands supported and the Zigbee API.

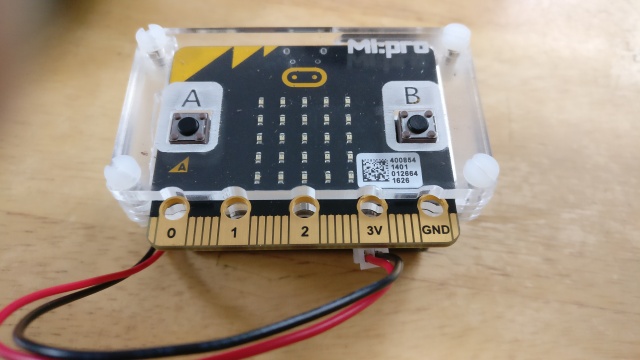

I had not realised that the Xbee modules allowed the creation of sensors and actuators without a separate microcontroller such as an Arduino.

I have a couple of breadboard adapters for the Xbee radios on order, so I can try the sensor and actuator projects from the book that don’t require an Arduino.
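To give an idea of what a microcontroller-free sensor sends, here is a sketch of decoding an IO data sample frame (frame type `0x92`) on the receiving side. The byte layout is my understanding of Digi's documentation for the Series 2 Zigbee firmware, and it assumes the receiving radio is in API mode without escaping (`AP=1`). The function name `parse_io_sample` and the dictionary keys are just names I picked.

```python
def parse_io_sample(frame: bytes) -> dict:
    """Decode one complete, unescaped 0x92 IO data sample API frame."""
    if len(frame) < 4 or frame[0] != 0x7E:
        raise ValueError("not an API frame")
    length = (frame[1] << 8) | frame[2]
    if len(frame) < length + 4:
        raise ValueError("truncated frame")
    data = frame[3:3 + length]
    # Frame data plus checksum must add up to 0xFF in the low byte.
    if (sum(data) + frame[3 + length]) & 0xFF != 0xFF:
        raise ValueError("bad checksum")
    if length < 16 or data[0] != 0x92:
        raise ValueError("not an IO data sample frame")

    digital_mask = (data[13] << 8) | data[14]  # which DIO pins are reported
    analog_mask = data[15]                     # which ADC inputs are reported
    pos = 16

    digital = {}
    if digital_mask:  # the two state bytes are only present if any pin is enabled
        states = (data[pos] << 8) | data[pos + 1]
        pos += 2
        for bit in range(13):
            if (digital_mask >> bit) & 1:
                digital[f"DIO{bit}"] = bool((states >> bit) & 1)

    analog = {}
    for bit in (0, 1, 2, 3, 7):  # AD0..AD3, bit 7 is the supply voltage
        if (analog_mask >> bit) & 1:
            name = "supply" if bit == 7 else f"AD{bit}"
            analog[name] = (data[pos] << 8) | data[pos + 1]  # raw 10-bit reading
            pos += 2

    return {
        "source": data[1:9].hex(),                  # 64-bit address of the sender
        "network": (data[9] << 8) | data[10],       # 16-bit network address
        "digital": digital,
        "analog": analog,
    }
```

The analog values are raw ADC readings, so they still need scaling against the radio's reference voltage before they mean anything.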

Zigbee networks are mesh networks, but rather complex ones. Each has one coordinator node, plus router and end device nodes. Coordinators and routers are usually mains powered, but end devices can be battery powered and made to sleep most of the time.

Configuring Xbees is complex as there are lots of firmware options, and lots of routing, security and network options.

The firmware comes in two main options for each of the types of nodes. There is an AT variant that supports AT commands and transparent mode, and an API variant that supports a structured data protocol.
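To show what "structured data protocol" means in practice, here is a small sketch that builds an API frame by hand: a `0x7E` start byte, a two-byte length, the frame data, and a checksum. It assumes API mode without escaping (`AP=1`). The helper names (`checksum`, `build_api_frame`, `at_command_frame`) are my own, not part of any Digi library.

```python
def checksum(frame_data: bytes) -> int:
    """0xFF minus the low byte of the sum of the frame data bytes."""
    return 0xFF - (sum(frame_data) & 0xFF)


def build_api_frame(frame_data: bytes) -> bytes:
    """Wrap frame data in the start delimiter, length and checksum."""
    length = len(frame_data)
    header = bytes([0x7E, (length >> 8) & 0xFF, length & 0xFF])
    return header + frame_data + bytes([checksum(frame_data)])


def at_command_frame(command: str, frame_id: int = 1, parameter: bytes = b"") -> bytes:
    """Build a local AT command frame (frame type 0x08).

    command is the two-letter AT command without the "AT" prefix, e.g. "NJ".
    An empty parameter queries the current value.
    """
    frame_data = bytes([0x08, frame_id]) + command.encode("ascii") + parameter
    return build_api_frame(frame_data)


if __name__ == "__main__":
    # Query the NJ (node join time) setting with frame ID 0x52.
    print(at_command_frame("NJ", frame_id=0x52).hex(" ").upper())
```

The same `build_api_frame` wrapper applies to the other frame types; only the frame data changes.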

The firmware is uploaded and configured with the Digi XCTU software.

Lots of application profiles are built on top of the Zigbee stack, including the Smart Energy Profile and the Home Automation Profile. The profiles support different clusters, which I think are a bit like BLE services. The profiles have different security and encryption requirements. It is all very complex.

I have several commercial Zigbee devices in my house, including my Scottish Power smart meter:

and its display device:

I believe these devices support the Zigbee Smart Energy Profile. That profile has high security requirements and I don’t think it would be possible to get a home made device to connect to that network. I suspect that the smart meter is a coordinator and the display device is an end device.

The Smart Energy profile should be able to support devices obtaining price information from the meter so that they can switch on or off depending on the price of electricity. For example, your thermostat could cool your house more when electricity was cheap. Or your EV charger could switch on when electricity was cheap and abundant. Or perhaps it could provide energy to the grid when electricity was expensive and in demand. It is a pity, but I don’t think I will be able to do any of this with DIY devices. Any devices doing that would need to be certified and installed with the appropriate keys.

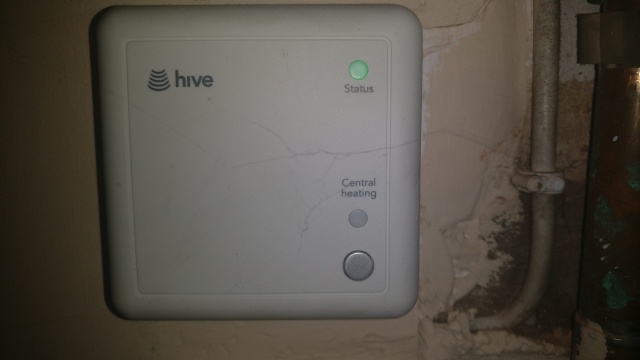

The other commercial Zigbee devices that I have are a Hive thermostat:

and its boiler controller:

and its Internet hub:

I am not sure what profile or profiles the Hive devices support. They may support the Home Automation profile or the Smart Energy Profile or possibly both. There is little information available on them. I suspect that the boiler controller is the coordinator for the network, the thermostat is an end device and the hub is a router or an end device.

There are other devices in the Hive range, such as motion sensors and contact sensors. They do support the Home Automation profile, as people have them working with a SmartThings Zigbee hub.

It seems unlikely that I could get a home made device to connect to the Hive heating Zigbee network. It would be nice to control the receiver directly rather than via the hub.

It would make sense for the Hive thermostat to support the Smart Energy profile so it could look at the price of electricity, but I don’t think anything like that has been rolled out yet.

It is a pity that I have these Zigbee networks that I cannot connect to.

So, is it worth my setting up my own Zigbee network with home-made wireless sensors? I suspect not, as it is all too complex, involving coordinators, end devices and possibly routers.

If I had a SmartThings hub, which already works as a coordinator for Zigbee HA profile end devices, it might be worth building or buying Zigbee sensors or actuators, but currently I don’t want to buy yet another hub device.

Phones do not currently support Zigbee but that could change if Google’s Thread ever supports Zigbee and Android phones support Thread.

As Thread is based on 6LoWPAN, which uses the same radio standard as Zigbee, support for Zigbee by Thread is possible, and there have been reports that it might be coming. Phones would have to include radios that support IEEE 802.15.4, but that will presumably come if and when they support Thread.

It looks like the Amazon Echo Plus, which has a built-in hub, supports Zigbee. I am more likely to get one of those than a SmartThings hub, so that could change whether it is worth my while building or buying any Zigbee Home Automation profile devices.

I currently have an Echo Show on order to replace the Echo in my kitchen. That does not support the hub functionality. Where would I put the Echo Plus? The living room is a possibility, but I have a Google Home in the living room. Decisions, decisions.

I bought a Pebble Time Steel a few weeks ago when the price dropped, and have just started looking at creating my own apps and watch faces for it.